The current generation of Wi-Fi, 802.11n, is soon to be superseded by not one, but two new flavors of Wi-Fi:

802.11n claims a maximum raw data rate of 600 megabits per second. 802.11ac and WiGig each claims over ten times this – about 7 megabits per second. So why do we need them both? The answer is that they have different strengths and weaknesses, and to some extent they can fill in for each other, and while they are useful for some of the same things, they each have use cases for which they are superior.

The primary difference between them is the spectrum they use. 802.11ac uses 5 GHz, WiGig uses 60 GHz. There are four main consequences of this difference in spectrum:

- Available bandwidth

- Propagation distance

- Crowding

- Antenna size

Available bandwidth:

There are no free lunches in physics. The maximum bit-rate of a wireless channel is limited by its bandwidth (i.e. the amount of spectrum allocated to it – 40 MHz in the case of old-style 802.11n Wi-Fi), and the signal-to-noise ratio. This limit is given by the Shannon-Hartley Theorem. So if you want to increase the speed of Wi-Fi you either have to allocate more spectrum, or improve the signal-to-noise ratio.

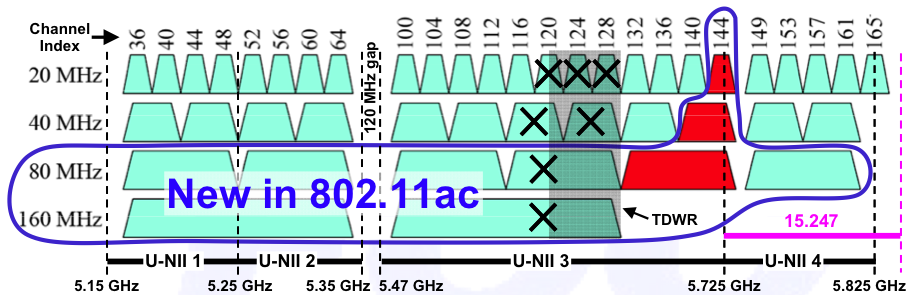

The amount of spectrum available to Wi-Fi is regulated by the FCC. At 5 GHz, Wi-Fi can use 0.55 GHz of spectrum. At 60 GHz, Wi-Fi can use 7 GHz of spectrum – over ten times as much. 802.11ac divides its spectrum into five 80 MHz channels, which can be optionally bonded into two and a half 160 MHz channels, as shown in this FCC graphic:

802.11ad has it much easier:

“Worldwide, the 60 GHz band has much more spectrum available than the 2.4 GHz and 5 GHz bands – typically 7 GHz of spectrum, compared with 83.5 MHz in the 2.4 GHz band.

This spectrum is divided into multiple channels, as in the 2.4 GHz and 5 GHz bands. Because the 60 GHz band has much more spectrum available, the channels are much wider, enabling multi-gigabit data rates. The WiGig specification defines four channels, each 2.16 GHz wide – 50 times wider than the channels available in 802.11n.”Source: Wireless Gigabit Alliance

So for the maximum claimed data rates, 802.11ac uses channels 160 MHz wide, while WiGig uses 2,160 MHz per channel. That’s almost fourteen times as much, which makes life a lot easier for the engineers.

Propagation Distance:

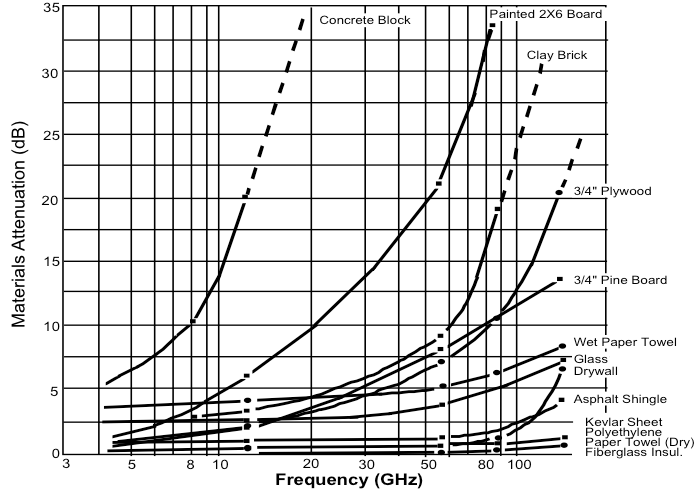

60 GHz radio waves are absorbed by oxygen, but for LAN-scale distances this is not a significant factor. On the other hand, wood, bricks and particularly paint are far more opaque to 60 GHz waves:

Consequently WiGig is most suitable for in-room applications. The usual example for this is streaming high-def movies from your phone to your TV, but for their leading use case WiGig proponents have selected an even shorter range application: wireless docking for laptops.

Crowding:

5 GHz spectrum is also used by weather radar (Terminal Doppler Weather Radar or TDWR), and the FCC recently ruled that part of the 5 GHz spectrum is now completely prohibited to Wi-Fi. These channels are marked “X” in the graphic above. All the channels in the graphic designated “U-NII2” and “U-NII 3” are also subject to a requirement called “Dynamic Frequency Selection” or DFS, which says that if activity is detected at that frequency the Wi-Fi device must not use it.

5 GHz spectrum is not nearly as crowded as the 2.4 GHz spectrum used by most Wi-Fi, but it’s still crowded and cramped compared to the wide open vistas of 60 GHz. Even better, the poor propagation of 60 GHz waves means that even nearby transmitters are unlikely to cause interference. And with with beam-forming (discussed in the next section), even transmitters in the same room cause less interference. So WiGig wins on crowding in multiple ways.

Antenna size:

Antenna size and spacing is proportional to the wavelength of the spectrum being used. This means that 5 GHz antennas would be about an inch long and spaced about an inch apart. At 60 GHz, the antenna size and spacing would be about a tenth of this! So for handsets, multiple antennas are trivial to do at 60 GHz, more challenging at 5 GHz.

What’s so great about having multiple antennas? I mentioned earlier that there are no free lunches in physics, and that maximum bit-rate depends on channel bandwidth and signal to noise ratio. That’s how it used to be. Then in the mid-1990s engineers discovered (invented?) an incredible, wonderful free lunch: MIMO. Two adjacent antennas transmitting different signals on the same frequency normally interfere with each other. But the MIMO discovery was that if in this situation you also have two antennas on the receiver, and if there are enough things in the vicinity for the radio waves to bounce off (like walls, floors, ceilings, furniture and so on), then you can take the jumbled-up signals at the receiver, and with enough mathematical computer horsepower you can disentangle the signals completely, as if you had sent them on two different channels. And there’s no need to stop at two antennas. With four antennas on the transmitter and four on the receiver, you get four times the throughput. With eight, eight times. Multiple antennas receiving, multiple antennas sending. Multiple In, Multiple Out: MIMO. This kind of MIMO is called Spatial Multiplexing, and it is used in 802.11n and 802.11ac.

Another way multiple antennas can be used is “beam-forming.” This is where the same signal is sent from each antenna in an array, but at slightly different times. This causes interference patterns between the signals, which (with a lot of computation) can be arranged in such a way that the signals reinforce each other in a particular direction. This is great for WiGig, because it can easily have as many as 16 of its tiny antennas in a single array, even in a phone, and that many antennas can focus the beam tightly. Even better, shorter wavelengths tend to stay focused. So most of the transmission power can be aimed directly at the receiving device. So for a given power budget the signal can travel a lot further, or for a given distance the transmission power can be greatly reduced.